|

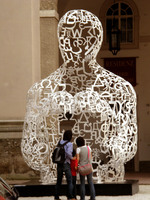

| 'Book stack' by ginny under a CC license |

There is even a book on 'rebooting civilization' and I'm sure that there are plenty more works on that question, which - by the way - is not at all uncommon.

The second part of the question seems simpler. There is no ultimate backup medium and we already know that the internet is no safe bet. Modern technology is good and sleek but it can fail, too (plenty of personal experience on that front). So, really, modern non-magnetic storage media, such as DVDs, seem like a decent backup solution but they haven't stood the test of time yet. Magnetic media are now reliable on the scale of 5-10 years but one shouldn't expect miracles. The 'cloud' could do better, since the storage equipment is maintained, but then access to the stored data can't be guaranteed. For any digital storage medium and format there's the additional challenge of compatibility with future (or past) equipment.

I hate to admit it, but as a storage medium paper has served us reasonably well, despite being fragile, compostable, flammable, etc. Amazing, isn't it? And with some tricks we could store even more per page, even though that would make pages illegible to humans (for example, the QR code below contains the first 2 paragraphs of this blog entry - and, yes, it can be printed smaller and still be readable by a smartphone).

To settle the argument, let's say that we use a combination of media and storage methods to be on the safe side. What should we put on those? The Survivor library that the article mentioned has an interesting selection of topics that range from 'hat making' and 'food' to 'anesthesia' and 'lithography'. Several state-of-the-art areas are missing (but that may be the point) and so are some well-established disciplines such as mathematics and physics, while some of the included topics we could possibly do without.

Some have proposed keeping a copy of Wikipedia in a safe place. Yes, Wikipedia can be downloaded (its database dump, at least) and the size - so far - is said to be about 0.5 TB (i.e., 512 GB, or about 110 common DVDs) with all the media files included.
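For the curious, here is a rough sketch of where the DVD count comes from. It assumes a common single-layer DVD holds about 4.7 GB (my assumption, not a number from the article) and it ignores file system overhead and the awkwardness of splitting files across discs. The function name `dvds_needed` is made up for this example.

```python
import math


def dvds_needed(dump_size_gb: float, dvd_capacity_gb: float = 4.7) -> int:
    """Return how many discs are needed to hold dump_size_gb gigabytes.

    dvd_capacity_gb defaults to 4.7, the nominal capacity of a
    single-layer DVD (an assumption made for this sketch).
    """
    if dvd_capacity_gb <= 0:
        raise ValueError("disc capacity must be positive")
    # Round up: a partly filled disc is still a whole disc.
    return math.ceil(dump_size_gb / dvd_capacity_gb)


if __name__ == "__main__":
    # Roughly half a terabyte, the figure quoted for the full dump.
    print(dvds_needed(512))
```

With these assumptions the result lands very close to the "about 110" figure; dual-layer discs (roughly 8.5 GB each) would bring the pile down to about 60.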

There are also several physical and digital archives. Some are specialised, others more general. The Internet Archive is an interesting approach, as it keeps snapshots of websites at various points in time. Not necessarily useful for the survival of mankind but interesting, anyway.

Another tricky bit that may not be apparent is that knowledge can only be used effectively by skilled people. So, not only do we need the knowledge but also a group of people with sufficient expertise to put that knowledge to good use. And then we need materials, resources and tools to allow for knowledge to be put into practice...

Hmmm... Saving civilisation seems to need a lot of thinking, after all :)